AItect 2022

AItect: Can AI make designs?

Architectural Intelligence / Artificial Intelligence

INDUCTION DESIGN / ALGOrithmic Design

pBM: project Beautiful Mind: Interactive Form Design Assist Program


- INDUCTION DESIGN

- (ID)

- ALGOrithmic Design

- (AD)

- Architectural Intelligence

- (AI)

- AItect :

- project Beautiful Mind

- (pBM)

- project Hand in Hand

- (HandIn)

(Translation: partly by a translator and partly by AI+Author collaboration)

- INDUCTION DESIGN(1990)

- SUBWAY STATION / IIDABASHI(2000)

- ALGOrithmic Design(2001 - )

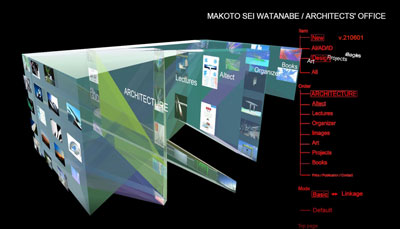

- WEB FRAME -Ⅱ(2011)

- ALGODEX(2012)

- ALGODeQ (international programming competition)(2013 / 14)

- AItect(2022)

- pBM project Beautiful Mind(2024)

- SUBWAY STATION / IIDABASHI - ventilation tower(2000)

- KeiRiki program series(2003 - )

- ShinMinamata MON(2005)

- AItect(2022)

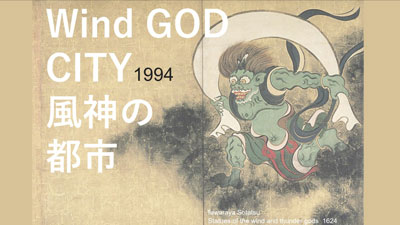

ID 1994-

Instead of design, generation

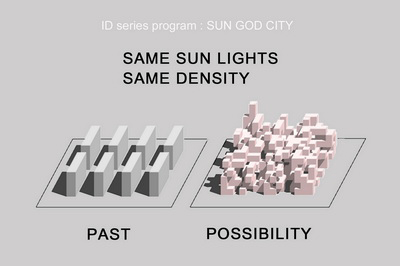

Can architecture and cities be generated according to required conditions instead of designed with traditional methods?

In 1994, struck by that concept, I created the first programs to enable it – the series of programs called INDUCTION DESIGN (ID).

Here, “conditions” means the various elements of the plans that make good cities and architecture possible. To begin, I selected sufficient light, pleasant breezes, and efficiency, together with streets that are a pleasure to walk on, an appropriately rising and falling topography, and various functions laid out in optimum relationships. Then I began working on computer programs to generate cities that would better fulfill these conditions.

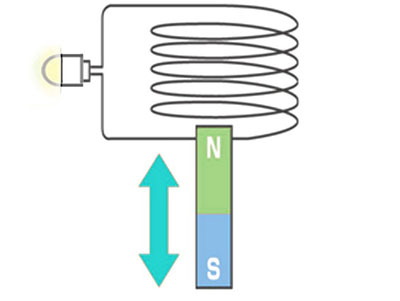

Rather than specifying forms and layouts through direct operations, these programs obtained their results by operating on the conditions. This resembles the electromagnetic induction of physics, so I called the series Induction Design.

A key point is the difference between ID and using the computer to create forms and plans. The aim of ID is to obtain the plan, environment, and structure required of architecture by obtaining a configuration, layout, and form that solve the project’s various conditions. Rather than human creations, these are solutions that best meet the conditions. They are “better” solutions. Therefore, although creating variations by adjusting parameters is important, the variations are not the objective. ID seeks correct solutions, not a large number of candidates.

In 2000, WEB FRAME (Subway Station IIDABASHI on the Oedo line) became the first work of architecture in the world to be generated with this method, solving required conditions by program, and actually built.

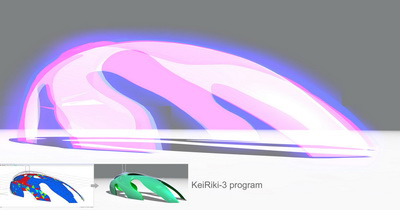

This was followed by the KeiRiki series, which took structural mechanical conditions and, while maintaining the architect’s intended form, generated the lightest structure. In 2005, the KeiRiki-1 program was used to complete the Shin-Minamata Mon project.

Nowadays, similar functions of the KeiRiki series, called generative design, etc., are becoming standard equipment in commercial CAD software.

The KeiRiki series is a much earlier pioneer of such "structural aptimization" software.

(The term "optimal" is inappropriate here, as there is more than one solution. The answer obtained is a solution that fits the specific parameters of the task to a "high degree", and it may not be the only "best" solution to the task.)

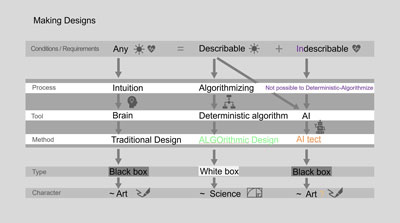

This series of attempts, including other instances, is called "ALGOrithmic Design (AD)".

AD 2001-

Externalize what occurs in the architect’s mind

Both ID and AD take judgments that are made in the architect’s mind, externalize them, and write them down.

Architects are normally not conscious of the mental processes they use to arrive at judgments and selections. After conducting various studies, they make these decisions intuitively.

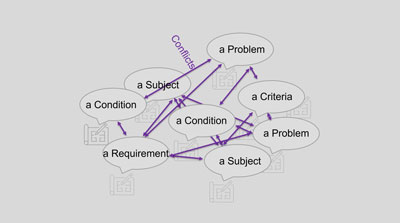

However, there are some challenges that the head, or human brain, cannot solve.

When there are interrelationships between elements, it is difficult to picture in our minds the best relationship between them.

Suppose that your interests conflict with someone else's, and consider finding an arrangement that both sides can accept.

If it is between the two of you, you might be able to come up with a solution.

Now, what if there are three or four more members, or a hundred people, some in conflict with each other and some with shared interests?

That would be unmanageable.

You would have to stop deliberating at some point and settle for the idea in front of you, saying that it is good enough. That decision may miss a better one that exists somewhere. But we cannot even check whether the "better idea" really exists.

This is a limitation of the brain's processing power.

However, a computer program can find the "much better" plan in these intertwined relationships.

Because the interests both conflict and overlap, there is no plan that will satisfy everyone perfectly.

But the plan with the fewest complaints from everyone can be found.

That is the "aptimized" solution.

The human brain cannot do that.
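As a rough illustration of "the plan with the fewest complaints", the sketch below places four functions in a row and exhaustively scores every ordering. The relations, weights, and the names `RELATIONS`, `total_complaint`, and `least_complaint_layout` are hypothetical and chosen only for this example; they are not taken from the ID or AD programs. Real projects have far more elements, so exhaustive search would give way to heuristic search.

```python
from itertools import permutations

# Hypothetical wishes between four functions placed in a row.
# ("close", w): the pair complains w for every step beyond adjacency.
# ("apart", w): the pair complains w for every step short of the maximum distance.
RELATIONS = {
    ("A", "B"): ("close", 3),
    ("A", "C"): ("apart", 2),
    ("B", "D"): ("close", 1),
    ("C", "D"): ("close", 2),
    ("A", "D"): ("close", 2),
}


def total_complaint(layout):
    """Add up every pair's complaint for one left-to-right ordering."""
    position = {name: index for index, name in enumerate(layout)}
    farthest = len(layout) - 1
    total = 0
    for (a, b), (wish, weight) in RELATIONS.items():
        distance = abs(position[a] - position[b])
        if wish == "close":
            total += weight * (distance - 1)
        else:
            total += weight * (farthest - distance)
    return total


def least_complaint_layout(names):
    """Score every possible ordering and return the one with the smallest total."""
    return min(permutations(names), key=total_complaint)


best = least_complaint_layout("ABCD")
print(best, total_complaint(best))
```

With these wishes, D cannot sit next to A, B, and C all at once, so no ordering reaches zero complaints. The search returns the ordering with the smallest total instead.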

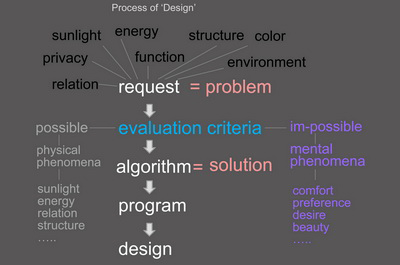

ID and AD are attempts to write down the judgment and selection processes as algorithms.

If these processes can be written as algorithms, they can be translated into computer programs. And if programs can be written, they can be used to generate architecture.

Therefore, the core of this method is externalization of algorithms.

To externalize algorithms, standards of judgment need to be set. It is necessary to define what is good and what is bad.

It is essential to decide what makes a good street, what makes a good disposition of functions, what makes a good XXX.

It is not hard to define “good” for physical phenomena like temperature or the amount of sunlight or wind. It is also possible to determine good and bad for function dispositions and volumes. But conditions related to human feelings and preferences are difficult to define in this way.

Good and bad can be defined when an underlying framework is accepted.

But preferences cannot be defined. Everyone is different from others, and different from themselves from day to day. Even if expressions were read and brain waves measured with a Brain-Machine Interface, tomorrow might bring a different result.

Standard values cannot be defined in the realm of preferences. Algorithms cannot be obtained without defined values, and programs cannot be written without algorithms. AD becomes impossible.

So what should be done?

AIdesign 2001-

Algorithms of preferences

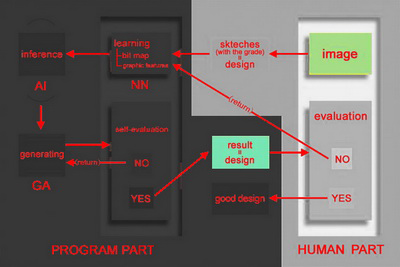

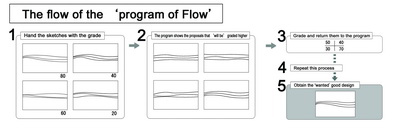

This challenge was taken up in 2001 by the Program of Flow.

This was developed to allow forms thought to be good by the architect to be obtained without writing down algorithms.

The architect's aims are achieved through a dialogue with the program. The architect makes multiple sketches, scores them, and passes them to the program. The program reads the drawings and produces what it thinks is a good form. This is scored by the architect and returned to the program. The idea is that the architect’s desired form will be displayed after a certain number of repetitions of this process.
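The score-and-propose loop can be sketched as a small interactive evolution. This is a minimal sketch under stated assumptions, not the Program of Flow itself: a form is reduced to a short list of numbers, the function `architect_score` stands in for the human scoring step by measuring closeness to a hidden `taste` vector, and only a genetic-style recombination is shown. All names and settings are hypothetical.

```python
import random


def architect_score(form, taste):
    """Stand-in for the human score (higher is better). A real session asks the architect."""
    return -sum((f - t) ** 2 for f, t in zip(form, taste))


def propose(parents, rng, count, spread=0.1):
    """Breed new forms: mix two well-scored parents gene by gene, then perturb slightly."""
    children = []
    for _ in range(count):
        a, b = rng.sample(parents, 2)
        children.append([rng.choice(pair) + rng.gauss(0, spread) for pair in zip(a, b)])
    return children


def dialogue(taste, rounds=30, population=12, keep=4, seed=0):
    """Alternate scoring and proposing; return the best form and the best score per round."""
    rng = random.Random(seed)
    forms = [[rng.uniform(0, 1) for _ in taste] for _ in range(population)]
    history = []
    for _ in range(rounds):
        forms.sort(key=lambda f: architect_score(f, taste), reverse=True)
        history.append(architect_score(forms[0], taste))
        parents = forms[:keep]  # the best-scored forms are kept as they are
        forms = parents + propose(parents, rng, population - keep)
    forms.sort(key=lambda f: architect_score(f, taste), reverse=True)
    return forms[0], history


best_form, scores = dialogue(taste=[0.2, 0.8, 0.5])
print(best_form, scores[0], scores[-1])
```

Because the best-scored forms are carried over unchanged, the best score never gets worse from one round to the next. This holds only while the scorer stays consistent, which a human may not.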

It might be said, correctly, that this could be done by hand. Every architect worthy of the name can draw good forms by hand.

It might also be said that the form of architecture is decided by making overall judgments of various requirements, and not by drawing good lines. That is also true. Before deciding on a form, studies are needed of functions, the environment, structure, and history.

But while conceding all of that, “good form” was still selected as a theme, because I think that this is the realm least suited to computer programs. If it is the most difficult, then there is value in taking up the challenge. Let’s try it.

Another reason for isolating the work of drawing forms from the integrated work of design is that this would allow other conditions to be incorporated. This is because values can be defined for many other conditions. If a value can be decided, then an algorithm to achieve it can be created, meaning that it can be programmed. The idea is that if it were possible to develop algorithms for this impossible theme – good forms – then it should be possible to develop them for other conditions.

Here, “good forms” has a number of different meanings.

To one architect, it may mean simply beautiful forms. Another architect may require that designs be astonishing, or even disturbing, in the same way that bad scents need to be mixed into certain good scents.

“Good” means different things to different architects. There is no such thing as an absolutely good form. There are as many good forms as there are architects and users. What kind of method could generate such individually different “good forms” without algorithms?

The 2001 Program of Flow was an attempt to realize such a method. Combining a neural network with genetic algorithms, it could be called AI.

In 2005, this program was used to complete the Tsukuba Express Kashiwanoha Campus Station, which is configured from 3D curved-surface unit panels. It can be called the first work of architecture in the world to have used AI to generate architecture by solving required conditions.

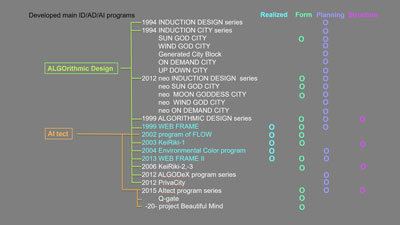

ID/AD/AI - World firsts

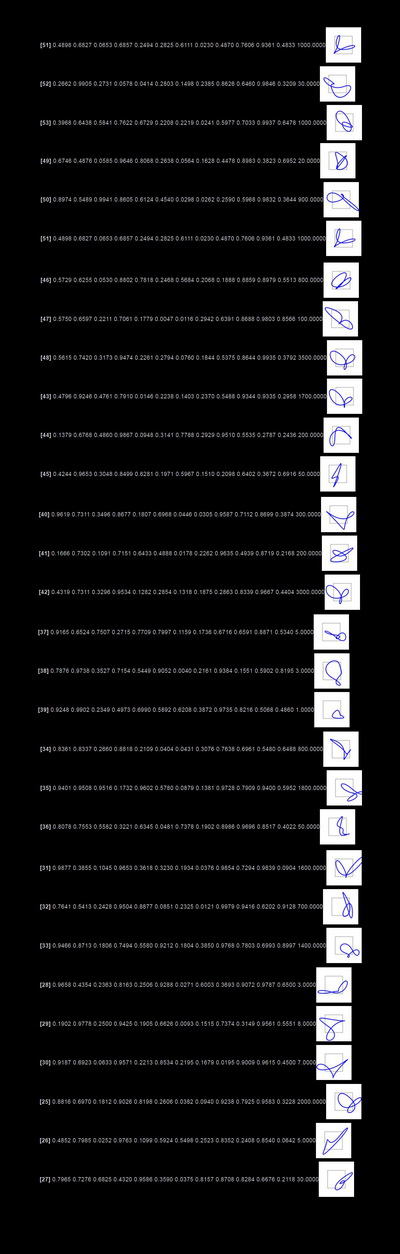

→ List of major programs researched/developed to date

Sections marked "Realized" in blue indicate that the program was used to design and complete the actual architecture.

→ Program-generated realized architecture

The following are considered to be "world firsts" in each of these areas.

Programmatic Generation (ALGOrithmic Design):

WEB FRAME → "WEB FRAME" at Subway Station Iidabashi on the Toei Subway Oedo Line, completed in 2000

The world's first architecture actually built using a program that solves requirements and generates form/composition.

Structural Aptimization (ALGOrithmic Design):

The KeiRiki series → Shin Minamata MON (New Minamata Gate), completed in 2005

Pioneer of the current semi-standard CAD function "structural aptimization"

(Commonly known as "generative design" in today's commercial software)

Generation by AI (AItect):

Program of Flow → TX Kashiwanoha-Campus Station, completed in 2005

The world's first architecture actually constructed using AI to solve requirements and generate form/composition.

AItect 2015 –

Will a super architect emerge? / pBM – project Beautiful Mind

The Tsukuba Express Kashiwanoha-Campus Station was completed as the world’s first AI-generated architecture. But the performance of the Program of Flow that was developed for this project did not reach the expected level. In the actual design, the program’s results were finally adjusted manually. In the end, the AI program was no match for human hands.

Then, in 2015, a new project was begun to inherit this concept – pBM: project Beautiful Mind.

The concept underlying pBM is the same as Program of Flow. Via a dialogue with the program, the architect obtains a form thought to be beautiful. Again, “beautiful” varies with different architects. Which quality matters (handsome, awesome, never seen before, disturbing, kawaii, ...) depends on the architect.

However, the technology employed reflects 14 years of progress.

pBM starts by returning to the simplest form – the triangle. The program begins by displaying your favorite triangle. Favorite triangle? Yes, most people probably do like some triangles better than others. This program achieved generally good results.

Next was Bézier curves, which also yielded good results. Currently, pBM is targeting 3D shapes. This is still in progress, but if it goes well then it will probably become possible to embed more complex shapes and conditions other than shapes.

pBM is a project that grows and evolves.

pBM aims at the emergence of the architect through AI, or in other words AI architect → AItect. pBM can also be called a project of collaboration between intuition and AI.

This series of conceptual perspectives – ID, AD, AItect – is an intellectual experiment in the realm of architecture. It could be called AI, for Architectural Intelligence.

The word AItect encompasses two meanings: Architectural Intelligence and Artificial Intelligence.

pBM project Beautiful Mind 2020 –

Objectives and targets

The pBM is an ongoing project as of 2020.

At present, pBM provides solutions that are effective to some extent, but it is not yet sufficiently satisfactory. We are working on trials and improvements (and in some cases innovations) in the following areas: development of the original AI, improvement of the UI, and a system for linking with CG software.

The goal of pBM is for the program to present the form (potentially) desired by the designer (i.e., beautiful form) in three-dimensional geometry.

Of course, there are many other conditions in architectural design besides the form. The objective at this stage is to prove that the desired answer can be obtained when only the condition of form is selected from among the various conditions.

Only one condition is selected because a program that cannot meet even a single requirement cannot satisfy multiple conditions.

If the program can solve the single condition called "morphology", then it can be expanded to deal with many different conditions.

To achieve many things, start from one thing.

Features

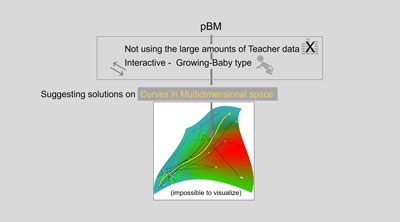

pBM_AI has the following features.

1- Does not require teacher data.

2- Proceeds interactively with humans.

3- Grows gradually (to some extent).

Many AIs require a huge amount of teacher data.

However, when trying to do a specific design, it is not practical to prepare a large amount of teacher data in advance.

Even if the images and data of all the works of that designer are read and used as teacher data, the amount of teacher data will not be sufficient. Besides, new designs cannot be created if the AI is pulled toward past trends.

An AI that does not use teacher data is required.

Moreover, since the user does not know himself/herself what he/she is seeking, the process of operation must be a dialogue between the user and the AI. Therefore, the operation process has to be interactive.

And because we start without any teacher data, it is essential to have a system that enables the AI to gradually learn and increase its effectiveness.

There is another feature.

4- No evaluation criteria are defined.

This is a big difference from ALGOrithmic Design.

In ALGOrithmic Design, the evaluation criteria need to be determined in advance. Otherwise, the algorithm cannot be composed. We need to determine what is good and where the boundaries are.

Even for an AI to distinguish between, say, a human face and a stain on a wall, it would need a consistent criterion that could distinguish between the two. It may be a criterion that humans can understand, such as the ratio of eyes/nose/mouth, or may be a criterion that the AI has learned on its own and is not recognizable to humans.

But with pBM, we do not decide if it's good or bad.

It is the users themselves who decide/choose it. The user determines the value each time.

In the process of interacting with the user, the AI "learns" what is good or bad. ("Learning" in this context is a figurative term)

It is you, on every trial, who decides whether it is good or bad.

The criteria for judging differ from person to person, and the same person often changes his/her mind.

What is beautiful to you may change from yesterday to today.

Even under such circumstances, pBM should be able to provide answers.

Trying to do exactly that is what makes the development of pBM difficult.

Performance

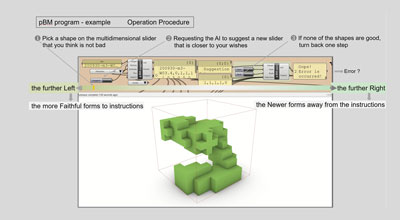

The UI of the pBM program is simple.

First, a form generated from many parameters is prepared using standard 3DCG software.

The combination of all parameters is enormous, so it is quite difficult (almost impossible) to find a "good form" by hand, changing parameters one by one.

This CG software is then set up to connect with pBM. Commercial CG software is chosen to increase the versatility of pBM.

The AI itself runs on our server, and pBM is operated via the web.

The program presents the user with a single parameter (i.e. a slider).

The user moves this single slider to find a form that he/she thinks is "not bad".

When he/she finds it, he/she clicks the Suggest button. Then the program presents the next slider.

From then on, the process is repeated.

It is easy to do. If he/she does not like it, there is a back button.

This slider looks like a straight line, but it is actually a multidimensional curve in a multidimensional space.

The AI chooses and presents a multidimensional curve that seems more plausible based on the points in multidimensional space chosen by the user.

This selection is the key to pBM_AI.

What the AI presents is not a "candidate itself" but a "single parameter correlated with multiple parameters" necessary for morphogenesis.

The slider presented differs in character on its left and right sides, with the left side being relatively faithful to user instructions and the right side moving further away from them.

This is to avoid a situation where the solutions converge in a similar style due to presenting forms that are close to the instructions.

If there is no unexpected development that betrays the instructions, it is not interesting.

On the other hand, if there is only a betrayal, it is no better than a random variation.

The "right" balance between loyalty and deviance is necessary.
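One way to picture a single slider that moves several parameters at once, faithful on the left and deviating on the right, is the sketch below. It is only an illustration under assumptions: the `faithful` and `deviant` direction vectors, the quadratic bend, and the function name `slider_to_parameters` are all hypothetical, and how pBM_AI actually chooses its curve is not described here.

```python
def slider_to_parameters(s, anchor, faithful, deviant, reach=0.5):
    """Map a slider position s in [0, 1] to a full parameter vector.

    anchor   : the point the user chose last time
    faithful : direction that follows what the user has favoured so far (assumed given)
    deviant  : direction that departs from it (assumed given)
    """
    if not 0.0 <= s <= 1.0:
        raise ValueError("slider position must lie in [0, 1]")
    along = reach * s                            # steady drift in the favoured direction
    away = 4 * reach * max(0.0, s - 0.5) ** 2    # zero on the left half, grows on the right half
    return [a + along * f + away * d for a, f, d in zip(anchor, faithful, deviant)]


anchor = [0.2, 0.5, 0.7]
faithful = [1.0, 0.0, 0.0]
deviant = [0.0, 1.0, -1.0]

left = slider_to_parameters(0.25, anchor, faithful, deviant)
right = slider_to_parameters(1.0, anchor, faithful, deviant)
print(left, right)
```

On the left half only the favoured parameter changes, so the form stays close to the instructions. Past the midpoint the other parameters start to move as well, which is where the unexpected forms appear.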

The pBM is also trying to incorporate the "Monkey jump effect" shown in the next section to some extent.

Difficulties in AD/AItect

Monkey jump effect

If the design conditions can be written down, the process of solving the problem can be made into an algorithm, so "ALGOrithmic Design" is possible.

If the conditions cannot be written down, algorithms are not available, so "ALGOrithmic Design" is not possible.

This is where AItect comes in, as it uses AI that is (potentially) capable of solving problems without writing them down.

Yet even AItect has its difficulties.

To solve a task that cannot be written down, it still needs to be evaluated.

Even if the evaluation criteria cannot be written down, the evaluation itself can be done.

Although we cannot explain what "like" means, we can tell whether we like it or not.

Using this mechanism, the AI can operate.

However, there must be a consistency in liking/disliking.

If the user keeps disliking things that were previously liked, the AI has nothing on which to base its decisions.

The AI becomes dysfunctional (possibly like HAL). And this is what often happens.
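The consistency requirement can be made concrete with a small measure of how often a user reverses an earlier verdict on the same form. This is a hypothetical diagnostic written for illustration, not a part of pBM; the function name `contradiction_rate` and the rating format are assumptions.

```python
def contradiction_rate(ratings):
    """Fraction of repeated judgments that reverse the previous verdict on the same form.

    ratings is a sequence of (form_id, liked) pairs in the order they were given.
    """
    last_verdict = {}
    repeats = 0
    reversals = 0
    for form_id, liked in ratings:
        if form_id in last_verdict:
            repeats += 1
            if last_verdict[form_id] != liked:
                reversals += 1
        last_verdict[form_id] = liked
    return reversals / repeats if repeats else 0.0


steady = [("a", True), ("b", False), ("a", True), ("b", False)]
fickle = [("a", True), ("a", False), ("a", True), ("a", False)]
print(contradiction_rate(steady), contradiction_rate(fickle))
```

A rate near zero means the likes and dislikes give the AI a stable signal to learn from. A rate near one means every new answer cancels the previous one.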

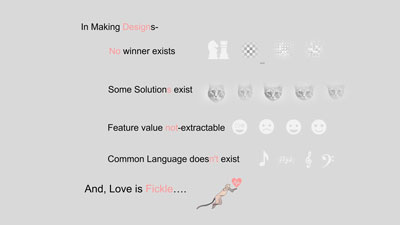

The human mind is fickle. Love is transposable.

This is an obstacle for AI.

It is fine when winning/losing or the degree of conformity is consistent, as in achieving victory in a game or reproducing the touch of a Van Gogh painting.

But when the subject is dependent on human emotions and moods, such as likes and dislikes, there is a great deal of fluctuation in evaluation.

And design is just that area.

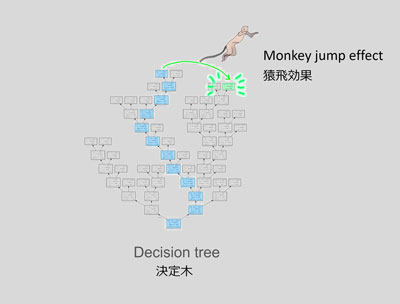

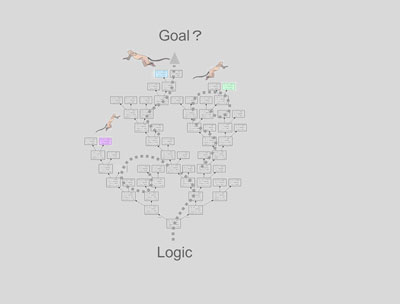

Even when you have advanced through a series of yes/no bifurcations and reached the end of a branch at the top of the decision tree, your "favorite" can suddenly jump in a flash to another, non-contiguous branch.

A leap that jumps over the path of logic, a leap that cannot be followed by logic. Action without context.

I call this the "Monkey jump effect".

The Monkey jump effect buries the accumulated efforts of decisions at each juncture in an instant.

It turns the decision tree into a useless dead tree.

The Monkey jump effect is the first of the many difficulties of design AI.

AItect's difficulties do not stop there.

It is not the same as chess, shogi, or video games, where a single answer (a winner) exists.

Unlike distinguishing human faces, animals, and cars, there is no clear typology of forms to classify, either.

Nor are there any common rules or language, like musical notation or chords.

In this vague universe of design, where there are few clues and where anything is possible, it is necessary to find what is "good".

Will we ever be able to catch the monkeys flitting from branch to branch?

HandIn – project Hand in Hand 2017 –

Is brain-AI collaboration possible?

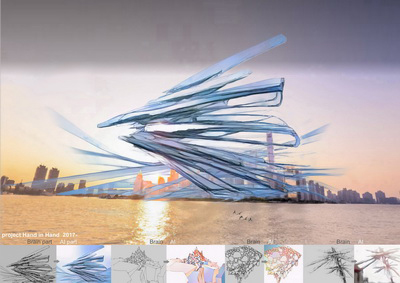

The pBM project is developing AI programs to achieve the desired objectives. At the same time, the lightweight HandIn project is also being implemented to use existing AI programs.

Since it is existing AI, anyone can use it. The point is which AI to use for which part of the design work.

The main focus of HandIn is using AI, not developing AI.

One example of HandIn is AI modifying manually drawn sketches.

The AI adds coloring and adjusts the lines of monochrome sketches. It makes no major changes to shapes. What to do is left up to the AI. The architect only selects the AI tendency. One click.

This AI does not engage in dialogues. It is one-way. But it does its work well. This is not a program that we have developed, but rather a website that anyone can use.

However, to a certain degree it is possible to “read” this AI’s algorithm. The AI appears to detect closed areas in the image, to adjust the overall color balance and gradation tendency, and so on. Up to a point, it is possible to infer what the AI is doing.

Therefore, this AI may be a gray box, rather than a black box.

By passing it through AI work, a hand-drawn sketch acquires a new character (one that it did not have at the time of sketching).

If the sketch was converted to 3D, a design could be developed even further.

This AI is extremely simple to operate, but the quality of the result depends on the quality of the original sketch. The work done by the AI does not surpass the imagination of the original sketch.

However, the AI can expand the imagination. It is an augmentation of physical capabilities by machine. More examples of this type of “capability expansion AI” will probably be seen in the future. (Although instead of expanding the capabilities of humans, they may replace humans.)

We are still taking small steps in collaboration between the brain and AI. But in the future they may lead to a giant leap (Neil Armstrong).

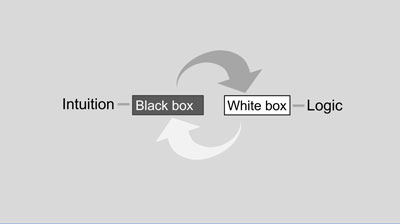

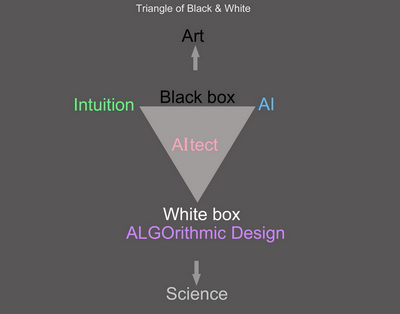

Black box + White box 20XX

In the end, white becomes black

ID and AD aim to externalize what the architect does in his mind. They write it as algorithms. They display answers to questions such as “Why is that line good” or “Why was that layout selected?”

The act of answering “why” questions is called science. Therefore, ID and AD are attempts to bring architectural design closer to science.

Make the act of designing, which up to now has been done through experience and intuition, a scientific act. That is the aim of ID and AD. By bringing design closer to science, make it possible to verify design performance.

If performance can be verified, it should be possible to discover methods that are even better.

But AItect does not write algorithms. There is no need to externalize judgments in the mind of the architect. The architect simply passes the requirements and examples of Yes and No to the program, and then obtains a good deliverable. There is no way to tell what judgments were made by the program, or how.

AItect is a black box.

Therefore, there is no science here.

Of course, the program itself is a product of science. But the program does not answer any “why” questions. It listens to what we say and then matter-of-factly displays a deliverable.

This is not science. It is closer to art. A good painting is good. There is no need to verify or analyze it.

Good things are good. Bad things are bad.

Here there is no intervention by a “why”.

Normal design is also a black box.

No matter how much the architect tries to explain it, he does not understand the real reason why he chose that line or this layout. The feelings that lie behind the words and the sudden onset of intuition cannot be explained.

In this sense, the architect is like AItect. Both are black boxes. Both learn and grow through experience.

Science + Art

Paths in the opposite direction actually lead to the same place

ID and AD tried to obtain better architecture by bringing architecture closer to science. They could be called white boxes, because they tried to answer “why” questions with rigorous logic.

The brain of the architect and AItect are the exact opposite.

They are black boxes.

Instead of answering “why” questions, they suddenly produce excellent architecture (assuming that they are good at what they do).

The attempt to obtain better architecture by bringing architecture closer to science involved the pursuit of white boxes.

It is interesting to see how this attempt ended up with black boxes.

Further, although science, with its principles of verification, and art, which is produced through intuition, appear to be going in opposite directions, in fact they may be describing a loop, so that they are connected at their destinations.

That is also interesting.

The challenge of ID, AD, and AItect will probably help clarify this uncharted trajectory.

It is not necessarily true that AI will remain a black box forever.

With the advent of Explainable AI (XAI), which is capable of providing a rationale for its decisions, AI may once again be transformed into a white box.

AD + AItect

When machines have dreams, what will humans see?

As a result of pursuing (soft) science in the realm of design, arriving not at science but art.

As a result of seeking to acquire the logic (algorithms) required by science from the act of design, which depends on experience and intuition, arriving at AI without algorithms (at least not algorithms that can be understood).

While pursuing the extraction of white box algorithms from the black box of intuition, arriving at AI, a new black box.

This is a paradox. A strange but interesting paradox.

Is collaboration possible between the original black box (brain: intuition) and the new black box (AI: learning type)?

During lectures on algorithmic design, there is a FAQ that comes up often: if programs generate architecture, what will architects do?

I always answer as follows.

Machines are better than people at solving complex problems with many intertwined conditions. In that realm, people are no match for machines. But people are the only ones who can create an image that does not yet exist. Machines do not have dreams.

Will this answer always be true?

Will the day come when machines have dreams?

Getting ready for that day will involve exploring that path of fortunate cooperation between the brain and machines.

This will require work in both areas: that of white boxes = the scientific approach = algorithmic design, and that of the two black boxes, toward collaborative methodologies = AItect.

In the same way that our left brains and right brains handle different functions and collaborate to deliver outstanding performance.

Related Lectures (Recent ones only)

30min.Lec. - Architect - Machina X Mind - Quest for Enigma - II 2022 / Taiwan

Works & Concepts - Architect ver.

"Machina X Mind - From the Brain, From the Hand"

03 May 2022 16:15-17:40

Lecture / English

Tamkang University (Taiwan)

Advanced version of "6 min. short/dense Lec. - Quest for Enigma - I"

17min.Lec. - Architectural Education - From the Brain, From the Hand 2022 / Taiwan

Education & Student Works - University Prof. ver.(Architectural course)

"Machina X Mind - From the Brain, From the Hand"

03 May 2022 16:15-17:40

Lecture / English

Tamkang University (Taiwan)

Ultra-short, high-density 6 min. Lecture 2022 / Tokyo

"AIS AAC - The externalities of design"

26 Feb. 2022

Online conference / English

Keynote speech 2020 / Albania

/ In the Era of Paradigm Shifts

POLIS University

TAW Tirana Architecture Week 2020

"Science and the City / In the Era of Paradigm Shifts"

28 Sept. – 11 Oct. 2020

Lecture 2019 / Tokyo

JST CREST HCI for Machine Learning Symposium

The University of Tokyo


First Open Symposium 2019

"Interaction × A.I. - JST CREST HCI for Machine Learning Symposium"

Koshiba-hall, Hongo Campus, The University of Tokyo

11 Mar. 2019